Automated image classification

Last week we demonstrated using image recognition services to help classify and describe images. The demo used two services: Google’s Vision AI, and Microsoft’s Cognitive Services. Both offer broadly similar feature sets:

- Tagging for subject matter

- Tagging faces within images

- Recognition and location of objects with an image

- Reading printed or handwritten text within images

- Selecting a portion of interest within an image (e.g. for creating more representative thumbnails)

Microsoft’s service offers one small feature that Google’s doesn’t: describing an image in a single sentence.

These classification services are completely generic – they have no specific domain knowledge, and so they can produce somewhat unexpected results. When a person looks at an image, there is typically associated context and meaning that the machine learning systems don’t know about.

For example when looking at a picture of dancers on a stage, they have no idea whether the dancers, their costumes, the shapes they make, the stage, the building it’s in, the country it occurred in, who the choreographer was, when it happened, etc, are of primary interest, so it simply tags everything with a confidence value relating to how strongly a tag matches the content.

Hits and misses

Microsoft plausibly describes this as “a group of people wearing costumes”, and provides a curious selection of tagged objects from this image: “person, grass, outdoor, people, group, holding, woman, standing, man, dressed, field, wearing, large, table, blanket, horse, walking, red, elephant, bear, stuffed, white, umbrella, crowd, playing, street”. S

ome of these are obvious, like “person” and “grass”, but others like “elephant”, ‘bear”, and “umbrella” are not – but they are at least tagged with low confidence values! We guessed that the drum skins were perhaps recognised as being animal-related. Also notable is the absence of “tree” and “building” from this list. All that said, these tags are a huge improvement over nothing at all, which is what we would have otherwise.

When generic recognisers work

Text and handwriting recognition generally works very well, even in cases where humans (me at least!) have difficulty:

Google read this as:

The Dunny copy consisted of 16 pages is and from keeping a scrap book of the letters we had received at the Star mit abtained a pretty good knowledge of that , the students themselver desired

Having extracted the text, it can then be indexed and searched for, providing a massive boost in visibility even if it’s not completely accurate.

Reduced scope for ambiguity

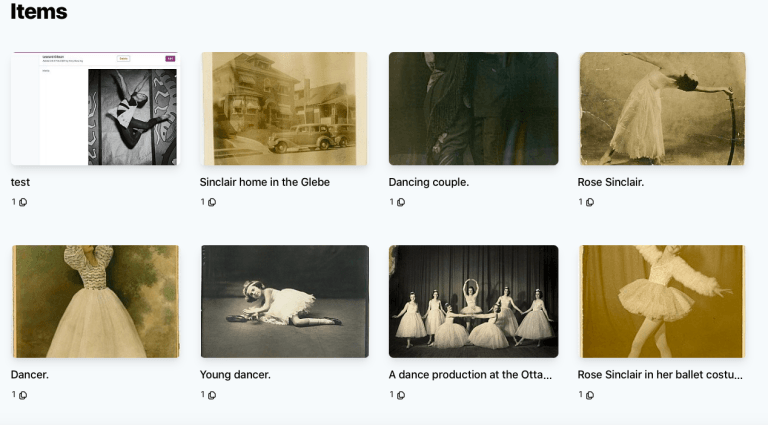

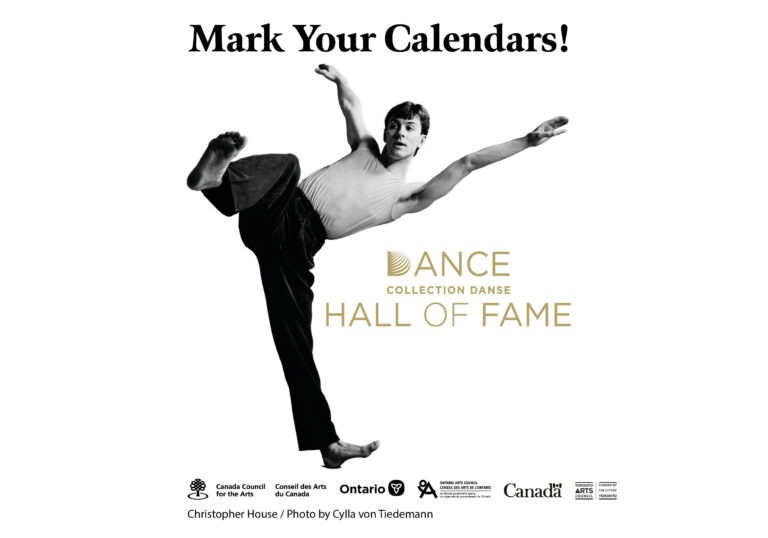

This image is much less complicated, and so produces fewer tags with higher confidence. Microsoft’s pretty accurate description is “a woman in a white dress”. Google tags this with “Performing arts, Dance, Dancer, Ballet, Athletic dance move, Ballet dancer, Choreography, Modern dance, Footwear, Leg”, all of which are impressively reasonable and useful.

During the demo, someone flagged that this is not in fact ballet, but contemporary dance – and that’s an excellent example of the limitations of this kind of generic recognition, and leads on to the next step in machine learning.

Building your own models

A generic recogniser is trained using very broad and unspecialised classification – so while it has no problem classifying this as a person and not a cup of tea, finer distinctions such as between dance genres are simply not there. But they can be.

Both Google’s and Microsoft’s services support the creation of your own classifiers – if you feed it enough dance-related images, and tell it what kind of genre they relate to, the recogniser will learn how to create such distinctions, and the more examples it has, the better it gets, and it happens surprisingly fast.

If you’ve ever encountered the much-hated “click all the images containing cars/traffic lights/buses/bicycles” CAPTCHA systems, you may not be surprised to learn that these are not only used to try to figure out whether you’re really human but also to train classifiers used for autonomous vehicle navigation – your human cognitive abilities are being used to train someone else’s machine learning models!

While this somewhat insidious use of your brainpower is not entirely for your benefit, volunteers can bring enormous value by crowdsourcing specialised knowledge. A great example of this is in the projects run by Zooniverse. Zooniverse’s first project, “Galaxy Zoo”, was in identifying galaxies vs stars from radio telescope data:

They have millions of these images; many of them look very, very similar, and distinctions between them are very subtle – humans have generally proved better at spotting patterns and shapes in them than the models have. So they use humans as both a primary classifying mechanism, but also to train machine learning models to improve automated classification.

Zooniverse has since gone on to make many more classification systems for subjects as diverse as manatee language, penguin migration, Australian transportees and ancient Egyptian literature, and their system is open-source, allowing anyone to build crowdsourced classification systems.

Accuracy

One concern that many raise about crowdsourcing is accuracy. You have little control over the crowd, and they can make different decisions, either because they are approaching the images in a different way, or just to be annoying!

When you have a high-volume, medium-quality classification system (what crowdsourcing typically gets you), you can feed it back into itself; don’t give too much weight to a single opinion; give the same image to multiple people and average out the classification, discard outliers, compare it to other images they have classified (e.g. how far away are they from average classifications?), and use other statistical tools to help improve accuracy.

For example, if 10 people say that an image is of a dancer, and one says it’s a cup of tea, you can probably safely ignore the cup of tea verdict altogether rather than allowing it to bias the data even slightly in the cup of tea direction. Another approach, which you can see in the Zooniverse example, is to restrict possible answers; you can imagine that left to their own devices, you’d get a vast variety of small variations on “fuzzy blobs on a dark background”, so ask very specific questions about it rather than leaving it open.

The choice of the questions you ask is important though, as it will have a direct influence of the capabilities of the model you train.

Bias in Machine Learning

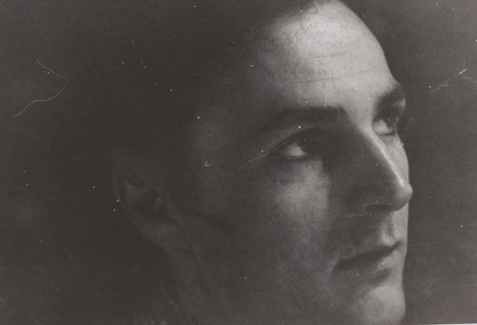

Depending on your audience can still be troublesome though, as even averaging can produce undesirable or biased outcomes. An excellent example of this came up this week when someone revealed an impressive demonstration of “depixelizing” via a machine learning system. Given a blurred/pixelized picture, the model generates face images that the original image could have come from. This is extremely clever, and technically impressive, but produced some rather unexpected outcomes, in particular, this one:

The results almost exclusively generated pictures of white people, or peculiar-looking white people with dark skin, even though the facial image data set it used is widely regarded as being reasonably diverse. It’s very difficult to avoid this kind of bias regardless of whether the classifiers are human or machine, but the machines will tend to follow or amplify human biases, as this talk goes further into.