Machine learning for DCD

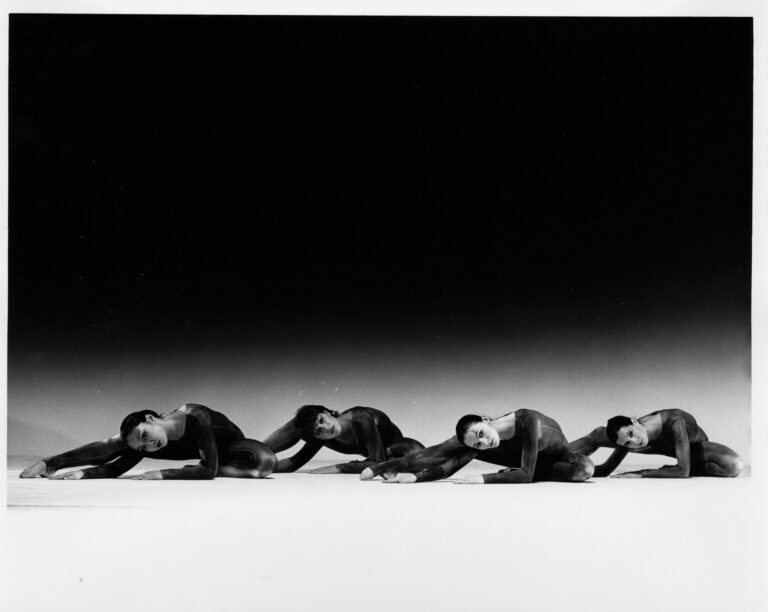

For the last few months we’ve been building a prototype system for storing and managing DCD’s collection. In recent years, all the cataloguing has been done manually into a simple online database. It’s organised and reliable, but its manual nature means that importing new content is arduous and labour-intensive. To date, around 4,300 items have been catalogued – but there are hundreds of thousands of photos alone that have yet to be dealt with. Cataloguing all of them would take literally decades at this rate, and new material is probably being added faster than it’s being catalogued!

Computers are meant to make this stuff easier, right? But how?

Machine learning is a term that you’ve no doubt heard, and it’s been getting a lot of attention because it has so many interesting applications. The underlying algorithms are not hugely complex, but to be effective they need to process vast amounts of data, because before they start being useful, they spend a lot of time being completely useless! Advances in processing speed are what have made this possible at all, but how does it work?

Traditional programming goes something like this:

input + program = output

Machine learning turns this around:

input + output = program

The idea here is to feed the system a pile of arbitrary input, some desired output, and ask it to figure out a program that generalises the transformation from input to output. This generated program is called a model, and the process of creating it is referred to as training. Unfortunately this process requires vast amounts of (good) data and processing time to try to create a model that behaves the way that we want. Once you have the model program, you can use it in the traditional way – provide it with input and produce output.
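To make the "input + output = program" idea concrete, here is a minimal sketch in Python. It is a toy, not anything from the DCD system: the temperature example, the `train` function and the tiny data set are all assumptions chosen for illustration. The hand-written function is the traditional approach; `train` is given only example inputs and outputs and works out the rule for itself using ordinary least squares.

```python
def traditional_program(celsius):
    """Traditional programming: a human writes the rule down."""
    return celsius * 9 / 5 + 32


def train(inputs, outputs):
    """Machine learning: fit y = w * x + b to example pairs (least squares)
    and return the resulting "program", i.e. the model."""
    n = len(inputs)
    mean_x = sum(inputs) / n
    mean_y = sum(outputs) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(inputs, outputs))
    variance = sum((x - mean_x) ** 2 for x in inputs)
    w = covariance / variance
    b = mean_y - w * mean_x

    def model(x):
        return w * x + b

    return model


# Example inputs (Celsius) and the outputs we want (Fahrenheit).
example_inputs = [-10.0, 0.0, 15.0, 30.0, 100.0]
example_outputs = [14.0, 32.0, 59.0, 86.0, 212.0]

model = train(example_inputs, example_outputs)

# Once trained, the model is used like any other program: input in, output out.
print(traditional_program(37.0), model(37.0))
```

The two printed values should agree very closely, even though nobody told `train` the conversion formula. Real models have millions of adjustable numbers rather than two, which is where the vast amounts of data and processing time come in.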

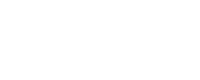

How does this work in practice? Say we have a huge “input” collection of miscellaneous dance images (this may sound familiar!), and we want to pick out images that are specifically of ballet. We provide a smaller set of human-selected images that are specifically of ballet (our target “output”) which we use to train the model. Hopefully this generates a model that can take images from our input set and tell us whether they belong to our output set, i.e. whether an image is of ballet or not. This process can have four possible outcomes:

- An image of ballet is recognised as ballet (true positive)

- A non-ballet image is classified as a non-ballet image (true negative)

- A ballet image is classified as non-ballet (false negative)

- A non-ballet image is classified as ballet (false positive)

The first two are what we want to see. The third (a false negative) is not too bad, as we might find that a future revision of our model correctly identifies images that it currently misses. The fourth (a false positive) is what we want to avoid, because it’s effectively contaminating our learning process, and may cause other images to be misclassified. An important step is to use these false results as feedback – if we add them, correctly labelled, into our training sets of ballet and not-ballet images, we can retrain the model so that it does better next time. Machine learning is all about learning from mistakes, because it makes an awful lot of them!
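The four outcomes and the feedback step can be sketched in a few lines of Python. The file names, labels and predictions below are made up for illustration, and the `outcome` helper is our own naming rather than part of any library.

```python
from collections import Counter


def outcome(is_ballet, predicted_ballet):
    """Name the outcome for one image, given the truth and the model's guess."""
    if is_ballet and predicted_ballet:
        return "true positive"
    if not is_ballet and not predicted_ballet:
        return "true negative"
    if is_ballet and not predicted_ballet:
        return "false negative"
    return "false positive"


# (file name, human says it is ballet, model says it is ballet) - hypothetical data
reviewed = [
    ("swan_lake.jpg", True, True),
    ("tap_class.jpg", False, False),
    ("nutcracker.jpg", True, False),
    ("ballroom.jpg", False, True),
    ("giselle.jpg", True, True),
]

counts = Counter(outcome(truth, guess) for _, truth, guess in reviewed)

# Feedback: every mistake goes into the training set it really belongs to,
# ready for the next round of training.
ballet_training = []
not_ballet_training = []
for name, truth, guess in reviewed:
    if truth != guess:
        (ballet_training if truth else not_ballet_training).append(name)

print(dict(counts))
print(ballet_training, not_ballet_training)
```

Here the missed ballet image ends up in the ballet training set and the wrongly accepted ballroom image ends up in the not-ballet set, so the retrained model has a chance to get both right.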

That’s a very simple example, and we are only asking our recogniser to resolve a simple binary yes/no choice. We are fairly likely to be able to generate a model that works quite well, but isn’t terribly useful. Now imagine that we want to distinguish between 100 different dance genres. This is a lot more useful, but is a lot harder, mainly on the human side of things: we might have trouble with these distinctions too. In practice, the hard part is coming up with good data on which to train the model – the less well-defined your training data, the less accurate the results will be. This is the point at which bias tends to creep into machine learning models.

What if we take this to its logical conclusion and say we want the model to be able to recognise anything at all? That’s clearly a very difficult thing to do, but it’s been (mostly) done. Who has access to billions of images coupled to reasonably accurate contextual information about them? Search engines. Google, Microsoft, and others have a vast library of imagery and context in the form of the entire web. The accuracy of the data that’s available is not high, but you can make up for this statistically by having mind-bogglingly huge volumes of images and context to work with, so individual anomalies will (you hope!) average out. These same companies have the enormous computing resources required to train models that are sufficiently good to make a reasonable go of trying to recognise more or less anything that people have ever taken photos of; there is an enormous difference between asking “is this a picture of ballet?” and “what is this a picture of?”.
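The "anomalies average out" argument can be illustrated with a small simulation. This is a simplified sketch under invented assumptions: each source labels an image correctly only 60% of the time, sources make their mistakes independently, and we take a simple majority vote. Real web data is messier than this.

```python
import random


def majority_is_correct(sources, accuracy, rng):
    """Ask `sources` unreliable labellers and report whether most were right."""
    correct_votes = sum(1 for _ in range(sources) if rng.random() < accuracy)
    return correct_votes > sources / 2


def fraction_correct(sources, accuracy=0.6, trials=200, seed=1):
    """Repeat the vote `trials` times and return how often the majority was right."""
    rng = random.Random(seed)
    hits = sum(majority_is_correct(sources, accuracy, rng) for _ in range(trials))
    return hits / trials


few = fraction_correct(sources=1)      # one unreliable source per image
many = fraction_correct(sources=1001)  # a thousand and one unreliable sources

print(few, many)
```

With a single source the answer is right only a little more often than not, while with a thousand and one sources the majority is almost never wrong. That is the statistical trick that lets huge, low-accuracy data sets produce usable models.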

The generic models that they have trained are available for anyone to use via public, web-based application programming interfaces, or APIs. We can build an HTML form that allows us to upload an image and ask one of these services to try to recognise what’s in it. This is really handy if you’re trying to add useful metadata to a huge collection of images, which is coincidentally exactly what we’re trying to do. The models they use are pretty impressive, and allow us to add vital searchable information to what would otherwise be a featureless ocean of images.
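As a rough sketch of what talking to such an API looks like, the Python below prepares an upload and turns a reply into searchable tags. Everything specific here is an assumption: the URL, the JSON field names (`image`, `labels`, `name`, `confidence`) and the 0.7 cut-off are hypothetical and do not belong to any real provider, each of which documents its own format. The request is built but deliberately not sent.

```python
import base64
import json
import urllib.request

# Hypothetical endpoint, for illustration only.
API_URL = "https://vision.example.com/v1/label"


def build_request(image_bytes, api_key):
    """Package an image as a JSON POST request (prepared, not sent)."""
    payload = json.dumps({"image": base64.b64encode(image_bytes).decode("ascii")})
    return urllib.request.Request(
        API_URL,
        data=payload.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )


def tags_from_response(body, min_confidence=0.7):
    """Keep only the labels the service is reasonably sure about."""
    labels = json.loads(body)["labels"]
    return [label["name"] for label in labels if label["confidence"] >= min_confidence]


request = build_request(b"not really an image", "demo-key")

# A made-up reply in the assumed format.
sample_response = json.dumps({
    "labels": [
        {"name": "dancer", "confidence": 0.97},
        {"name": "stage", "confidence": 0.81},
        {"name": "moose", "confidence": 0.12},
    ]
})

tags = tags_from_response(sample_response)
print(request.get_method(), request.full_url)
print(tags)
```

The confidence cut-off matters in practice: set it too low and the catalogue fills up with unlikely guesses, set it too high and useful tags are thrown away.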

But it’s still a compromise. Such models might reliably identify someone in a picture as a dancer, but might have trouble doing any more than that. These generic models can equally recognise pictures of moose, violins, or staplers, which is nice, but not that useful to DCD.

All this leads us to two objectives:

- Use generic machine learning models to populate general information about media items with minimal human intervention to help us make the DCD archive more easily searchable.

- Use the knowledge of members of the dance community to help train custom models that make identification and classification of DCD material more precise.

And that’s what we are aiming to do.